Intro

In the typical Monty experiment, hypothesis processing during evidence updating is a significant bottleneck. For each new observation, every location on every object model in every LM needs to be compared against and considered in a series of complex operations. Multithreading support exists for object models within an LM, though the LMs themselves are evaluated sequentially, despite being conceptually parallel operations.

Currently the entire Monty system runs on CPUs, which are designed to be able to run a variety of sequences of operations with complex branching structures. Though when it comes to parallelization, CPUs struggle to support the throughput of processing large amounts of data. Even multicore CPUs with multithreading support can support only a max of a few dozen threads in parallel. GPUs, which consist of SIMD (Single Instruction Multiple Data) processors are designed to run a more limited set of operations but on a large amount of data, supporting thousands of threads at once. Therefore, when looking to accelerate a workload on GPUs, the key is to identify ways of structuring the problem such that there are large blocks of data being processed with the same operations.

In Monty, the clear candidate for GPU acceleration is hypothesis processing for evidence updates. Conceptually during a Monty step, all LMs that are not dependent on each other can run in parallel. Within an LM, each object model can run in parallel. Within the update of a single object model, the operations performed across the hypotheses have dependencies but can largely run in parallel. Viewed as one system, a Monty step consists of the same operations processing a large number of hypotheses, which is perfect for GPU acceleration.

This led me to investigate the potential of running the hypothesis update steps for all hypotheses across all LMs as a single GPU dispatch per operation. I selected several of the hypothesis update operations, and wrote custom CUDA kernels to run these operations across all hypotheses at once.

Method

There are seven GPU kernels that I present findings for here: displacement, pose transformation, distance calculation, pose evidence, angle calculation, radius evidence max, and evidence aggregation. More details included at the end.

Refactoring the Monty codebase to use this single-dispatch approach would be challenging. Instead, as a starting proof-of-concept, I created sub-classes of the HypothesesUpdater and HypothesesDisplacer classes that add trace capture features to save the input/output data of each operation. Then in a separate, stand-alone script I profile and verify the GPU kernels against the CPU implementations.

During trace capture, I consider a unique trace to be an LM and object model pair. For each trace I record the experiment step, so that in the profiling script I can group all traces from each step to run as a batch. In these benchmark experiments each of the LMs connected to the same sensor module are being given the same observation at each step. Therefore the evidence update operations within each step can all run in parallel, but hypothesis updates from one step will affect the next step, preventing further parallelization outside the step boundary.

The standalone script performs performance profiling and verification of the batched GPU kernels against the default CPU implementations. Verification compares the results for each hypothesis in each trace between the two implementations, ensuring they are within a tolerance of 1e-5. To generate performance data for the CPU implementation, each operation is run sequentially over each trace in the step and the compute time is measured. The overall CPU runtime for a step is the sum of the individual trace runtimes within the step. This is of course not accurate to Monty’s current multithreading implementation of evaluating multiple object models within an LM. Likely, there is more CPU parallelization that could be achieved on top of this. However, for benchmarking in this standalone script, it would be hard to accurately measure multi-threading performance, so I stick to this simple performance profiling method for preliminary results.

For the GPU implementation, I stack the data from each trace within a step into single tensors, which are fed as input to the CUDA kernels. Each operation runs as a single kernel dispatch over the entire set of hypothesis data across all traces in that step. The runtime for the GPU implementation is the compute time this single dispatch takes to complete.

Benchmark Experiments

The four benchmark experiments that I have captured trace profiles from are base_config_10distinctobj_dist_agent, randrot_noise_10distinctobj_5lms_dist_agent, base_77obj_dist_agent, and randrot_noise_77obj_5lms_dist_agent. I targeted these to get a variety of object counts and LM counts for a variety of trace sizes. I don’t capture the entire experiment, as the amount of trace data would be extreme, instead I capture the first 500 traces per LM.

Caveats

I only profile the raw compute time of the kernels, not the memory operations on either side, which can be substantial. This was a straightforward way to get preliminary results comparing the compute time spent between the two approaches. In practice, there will likely be significant overhead in synchronizing the LMs for one dispatch and the memory operations involved in launching the kernel (CPU-GPU memory transfer, coalescing data from multiple LMs etc.). However, there are also many ways that we could mitigate the overhead of these factors, so it is difficult to get an accurate read on their impact at this early stage.

Further, the kernels I report here are not perfect and are still a work in progress. In particular, the custom distance kernel and pose evidence kernel still have inaccuracies to be debugged further. The custom distance kernel is accurate only to around 0.1 tolerance, while the pose evidence kernel is off by 0.5 for a subset of hypotheses. Also, I couldn’t quite crack the stacked KNN kernel yet, so I won’t report it here. On top of fixing the issues with the existing kernels, I know that we can optimize these PoC GPU kernels further. There are several parts of a few kernels that are quite inefficient, I just haven’t had the time to improve them. It is important to recognize these caveats to the numbers I present here: no LM synchronization overhead, no memory overhead, no CPU multithreading, and kernel bugs, among others.

All of these characteristics of the actual workload will have a significant impact on runtime, and I welcome any feedback about what steps we should take to investigate these problems next. For now, what I have are these raw compute numbers. Take them with a grain of salt, but they still indicate strong potential for GPU acceleration.

Results

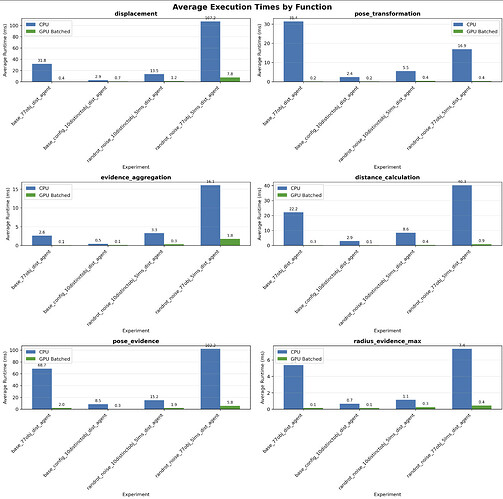

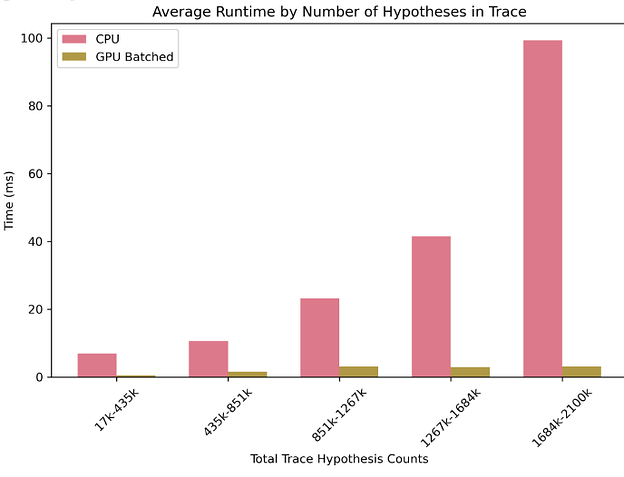

Across the board, the stacked GPU kernels outperform the sequential CPU time by a wide margin. Summing all steps for all traces across the 4 benchmark experiments I captured, the stacked GPU kernel implementation outperformed the CPU implementation by 14.4x Plot 1 below displays the average per-trace runtime in ms of each function per experiment. The speedup multiplier per-trace for each function for each experiment is shown in the following plots 2-5. Finally, plot 6 shows how the GPU and CPU implementations scale as the hypothesis count grows. There is a decent amount of variability in the runtimes, particularly for lower hypothesis counts. This is clear from Plot 1, where the relative runtimes of the different functions is quite different experiment to experiment. Generally, as the hypothesis counts grow, the runtimes become more consistent.

Plot 1: Average Per-Trace Runtime per Function per Experiment

Plot 2: Average GPU Speedup per Function for base_config_10distinctobj_dist_agent

Plot 3: Average GPU Speedup per Function for randrot_noise_10distinctobj_5lms_dist_agent

Plot 4: Average GPU Speedup per Function for base_77obj_dist_agent

Plot 5: Average GPU Speedup per Function for ranrot_noise_77obj_5lms_dist_agent

Plot 6: Average Step Runtime by Total Hypothesis Count

Environment

These experiments were performed on my Windows laptop with these Specs:

-

CPU: Intel(R) Core™ Ultra 9 185H 2.50 GHz

-

GPU: NVIDIA GeForce RTX 4070 Laptop GPU

-

RAM: 32 Gb

Conclusion

While there are significant caveats as context to these performance gains, the gains themselves are substantial and merit further investigation into GPU acceleration of Monty. We need to consider the existing CPU multithreading, the code refactoring necessary to handle the LM synchronization and data processing, and the hardware platforms these will run on. Ultimately, the impact of GPU acceleration within the Monty codebase could look very different than in this stand-alone analysis. Of course, it would be worth our time investigating CPU parallelization further. I know having a CPU backend for Monty will always be an important feature for low-power devices and constrained runtimes. However, for faster research turnaround and benchmarking against traditional deep learning approaches, a faster GPU-acceleration implementation would be valuable.

Kernel Details

The monty_cuda.displacement_stacked kernel implements the first part of the displace_hypotheses_and_compute_evidence() method of the HypothesisDisplacer, where the possible hypothesis poses are rotated by the calculated displacement of the new observation from the previous observation.

The monty_cuda.pose_transformation_stacked kernel implements the spatial_arithmetics.rotate_pose_dependent_features() function where the pose dependent features of the hypotheses are rotated.

The monty_cuda.custom_distance_stacked kernel implements the get_custom_distances() function where the distances, modulated by the point normal and curvature, are calculated between the search locations and their nearest neigbors.

The monty_cuda.pose_evidence_stacked kernel implements the _get_pose_evidence_matrix() method of the HypothesesDisplacer where the pose evidence for the nearest neighbors within the search radius are calculated. See note about monty_cuda.angle_calculation below.

The monty_cuda.angle_calculation kernel implements the get_angles_for_all_hypotheses() function that computes the angles between the hypotheses and their neighbors. Note, this calculation I actually combine with the pose_evidence_stacked kernel as the inputs directly feed into that kernel. All performance and verification data in this post includes monty_cuda.angle_calculation and monty_cuda.pose_evidence together.

The monty_cuda.radius_evidence_max_stacked kernel implements the max operation to select the highest evidence from the search radius for each hypothesis.

The monty_cuda.evidence_aggregation_stacked kernel performs the weighted sum of the current and past evidence for the hypotheses that occurs in the displace_hypotheses_and_compute_evidence() method of the HypothesesDisplacer.

This list of kernels provides a range of complexity for understanding the impact of GPU acceleration, from very simple kernels like the radius evidence max kernel to the more complex operations like the pose evidence kernel. I am still working on a stacked KNN kernel. There are also more operations that would need to be GPU-accelerated, and various conditional edge cases that I haven’t implemented. These kernels are a starting point for generating performance data for most of the core computations involved in the evidence update steps.