Hi,

I’ve been studying the Thousand Brains Theory in detail and wanted to raise a question about a possible contradiction or gap in the current model.

The theory states that:

- Each cortical column receives input from a fixed, small patch of the sensory array. In vision, this means a specific, consistent region of the retina.
- Each column learns complete models of objects based solely on the input it receives from that specific retinal patch.
- Recognition comes from many cortical columns voting on the object being sensed, with each column learning independently.

Now, here’s the issue…

Thought Experiment (or Potential Study Design)

Suppose we generate a completely novel, artificial logo (something the subject has never seen before), about 1 cm² in size.

We run the following experiment:

1. Training Phase:
   - The subject looks at a fixed point on a screen, with eye-tracking used to make sure the eyes do not move.
   - The logo is flashed briefly (just long enough to register) only in the upper-left corner of their visual field, so it falls on a small, known retinal patch.
   - This ensures that only a limited number of cortical columns, those connected to that specific patch, are involved in learning the logo.

2. Test Phase:
   - Later, the same subject again fixates on the same point.
   - The same logo is now flashed on the opposite side of the visual field, so it activates a completely different set of retinal receptors and therefore a different set of cortical columns (ones that never saw or learned the logo).

Expected Outcome (Based on Real Human Perception)

Even though the logo now falls on a completely new part of the retina, the subject will most likely recognize it instantly.

The Problem

According to the theory, the columns receiving this second stimulus:

- Have never seen the logo before.

- Should have no prior model of it.

- Should not be able to recognize it individually.

But recognition still happens. This seems to violate a key assumption of the theory: that each column builds its own model and only recognizes what it has experienced directly.
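To make the gap concrete, here is a toy Python sketch of the premises exactly as I stated them above. It is not Numenta’s or the TBP’s implementation, and the grid size, the `Column` class, `flash`, `recognize`, and the feature names are all hypothetical. Each column stores only what it saw at its own fixed patch, and recognition is a simple majority vote among the stimulated columns. It deliberately leaves out reference frames, sensor movement, and any interaction between columns other than the final vote.

```python
from collections import Counter

GRID = 8  # hypothetical 8x8 grid of retinal patches, one column per patch


class Column:
    """Toy cortical column bound to one fixed retinal patch (illustrative assumption)."""

    def __init__(self):
        self.memory = {}  # local feature -> object label, learned only at this patch

    def learn(self, feature, label):
        self.memory[feature] = label

    def infer(self, feature):
        # None means this column never saw the feature at its own patch.
        return self.memory.get(feature)


def flash(logo_parts, top, left):
    """Map each logo part to the patch it lands on when the logo's corner is at (top, left)."""
    return {(top + r, left + c): part for (r, c), part in logo_parts.items()}


def recognize(columns, stimulus):
    """Each stimulated column votes independently; the most common non-empty vote wins."""
    votes = Counter()
    for patch, feature in stimulus.items():
        guess = columns[patch].infer(feature)
        if guess is not None:
            votes[guess] += 1
    return votes.most_common(1)[0][0] if votes else None


columns = {(r, c): Column() for r in range(GRID) for c in range(GRID)}
# Hypothetical novel logo split into four parts, each covering one patch.
logo = {(0, 0): "edge-a", (0, 1): "curve-b", (1, 0): "corner-c", (1, 1): "dot-d"}

# Training phase: the logo is flashed only in the upper-left corner.
for patch, feature in flash(logo, 0, 0).items():
    columns[patch].learn(feature, "novel-logo")

print(recognize(columns, flash(logo, 0, 0)))                # -> novel-logo
print(recognize(columns, flash(logo, GRID - 2, GRID - 2)))  # -> None
```

Under these assumptions the columns at the trained location recognize the logo, while the columns at the opposite corner return nothing. That is the prediction that seems to conflict with what we expect from real human perception.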

Questions for the Community / TBP Team:

-

Is this conflict something the theory has already addressed?

-

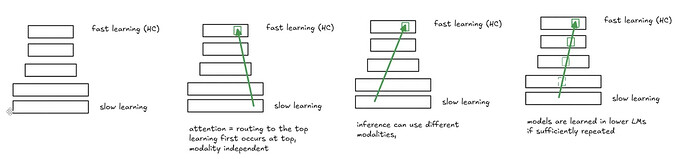

Does the Thousand Brains model allow for some form of model transfer or communication between columns that would enable this kind of recognition?

-

Or might this point to limitations in the column-specific model-learning framework, at least when applied to high-speed visual recognition?

I’d love to hear thoughts from the team or anyone deeply familiar with the theory. I’m not trying to discredit the work; I’m just trying to understand whether this is a known open question or something that needs further consideration or experimental validation.

Thanks for all the incredible work you’ve done in building this model!