Jeff gives a high-level overview of the AI bus and the original ideas on which the Thousand Brains Project is based

I transcribed the content of the first whiteboard to make it easier to read:

- GOALS

- How we tackled Goal #1

- What we learned

- Roadmap #1

- AI BUS (Cortical Communications Protocol)

- The birth of industry fires (Amazon, PALM)

- Roadmap #2 AI & BVS

- Options for what to do next

1) GOALS

- Reverse engineer the neocortex (biologically constrained).

- Be a catalyst for the age of intelligent machines.

Assumptions:

- Today’s AI is not intelligent. Future AI will be based on different principles.

- The fastest way to discover those principles is by reverse engineering the brain.

2) HOW WE TACKLED GOAL #1

- Cortex is composed of many nearly identical cortical columns.

Q1: What does a cortical column do and how does it do it?

Q2: How do columns work together?

- We didn’t know what columns did, so we identified sub-functions and tackled them one at a time:

- Prediction

- Sequence memory

- Input movement

- Uncertainty

- Prediction based on movement

- We studied other brain areas as needed:

- Thalamus, entorhinal cortex, hippocampus

- We mostly restricted our studies to columns that directly receive sensory input.

3) WHAT WE LEARNED

Q1: What does a cortical column do and how does it do it?

- Each column is like a miniature brain.

- Associated with each column is a sensor (input).

- A column knows where its sensor is relative to things in the world (reference frame).

- Therefore, as the sensor moves, the column learns models of the spatial structure of objects and environments.

Displacement

- Model is the location and orientation of chairs and their relation to each other.

- The model is independent of the current location of the sensor.

- Columns also learn sequences of displacements.

- Columns perform complex functions. We know a lot about how they do it:

- Sparsity

- Dendrites

- Minicolumns

- Laminar circuits

- Grid cells

- Vector cells

- Orientation cells

But many things are still confusing.

Q2: How do columns work together?

VOTING

- Columns share hypotheses about what object they are sensing. This explains how we can sometimes recognize something with a single glance or one grasp of hand.

- Voting works across modalities.
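
As a rough illustration of the voting idea above, here is a minimal Python sketch in which each column holds a set of candidate objects and voting keeps only the candidates that every column still considers possible. The function name `vote`, the set-intersection rule, and the example objects are assumptions made for illustration; they are not taken from the whiteboard or from any Numenta code.

```python
from functools import reduce


def vote(column_hypotheses):
    """Return the object hypotheses that every column still considers possible.

    column_hypotheses: list of sets, one set of candidate object names per column.
    Plain set intersection is a simplification chosen for illustration.
    """
    if not column_hypotheses:
        return set()
    return reduce(lambda a, b: a & b, column_hypotheses)


# Three hypothetical columns (e.g. three fingertips) each sense one ambiguous feature.
touch_1 = {"mug", "bowl", "can"}  # curved surface
touch_2 = {"mug", "can"}          # vertical wall
touch_3 = {"mug", "teapot"}       # handle

consensus = vote([touch_1, touch_2, touch_3])
print(consensus)
```

No single column can name the object here, but one simultaneous grasp leaves only one shared candidate, which is the intuition behind recognizing something with a single grasp.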

4) PROJECT ROADMAP #1

| SPEED ✓ | | CONTINUOUS LEARNING ✓ | | FAST LEARNING ? | | |
|---|---|---|---|---|---|---|
| ↑ | | ↑ | | ↑ | | |
| SPARSITY | → | DENDRITES | → | REFERENCE FRAMES | → | 1) Sensory motor learning 2) Movement 3) Multi-column voting (TBT) |

Thank you moe!

After watching the video on the AI Bus and the brainstorming video, it’s amazing to see similarities between some aspects of VSLAM and CMP (AI Bus), both in how they handle pose and depth and in their consensus mechanisms. I’m just trying to map some of them here:

Distributed Processing and Representation

- Visual SLAM systems often use multiple keyframes or landmarks to build a map of the environment. Each keyframe or landmark contributes to the overall understanding of the space

Integration of Sensory and Motor Information

- SLAM systems combine visual input with motion data (from odometry or IMU sensors) to create a coherent map and estimate the camera’s position

Reference Frames and Spatial Relationships

- SLAM systems create and maintain a global reference frame to relate different observations and camera positions. Again, this can use a simple pose camera alone or combined with a depth camera.

Continuous Learning and Updating

- SLAM systems continuously update their map and position estimate as new information becomes available

Handling Ambiguity and Uncertainty

- Advanced SLAM systems often use probabilistic methods to handle uncertainty in measurements and feature matching. While not typically called “voting,” VSLAM systems often use consensus algorithms or optimization techniques to reconcile different observations and estimates.

Hierarchical Processing

- Many SLAM systems use hierarchical approaches, processing information at different scales or levels of abstraction

Importance of Movement

- Movement is crucial in SLAM for triangulating positions and building a comprehensive map

It would be interesting to know if there is a collaboration with this space. Thanks again for uploading.

Best,

Hey Anitha, yes you’re absolutely right, there are definitely some deep similarities between the Thousand Brains Theory (TBT) and SLAM / related methods like particle filters.

E.g. you might find the below extract from Numenta’s Lewis et al, 2019 paper interesting:

“To combine information from multiple sensory observations, the rat could use each observation to recall the set of all locations associated with that feature. As it moves, it could then perform path integration to update each possible location. Subsequent sensory observations would be used to narrow down the set of locations and eventually disambiguate the location. At a high level, this general strategy underlies a set of localization algorithms from the field of robotics including Monte Carlo/particle filter localization, multi-hypothesis Kalman filters, and Markov localization (Thrun et al., 2005).”
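
The strategy in that extract can be sketched in a few lines of Python. This is a discrete, noise-free toy version written for illustration, not the model from the paper and not a weighted particle filter; the function `localize`, the grid map, and the feature names are all assumptions.

```python
def localize(feature_map, observations):
    """Narrow down candidate locations from (movement, feature) observations.

    feature_map: dict mapping (x, y) -> feature name (a hypothetical learned map).
    observations: list of ((dx, dy), feature); the first movement is usually (0, 0).
    """
    candidates = set(feature_map)  # start with every known location
    for (dx, dy), feature in observations:
        # Path integration: shift every candidate by the movement just made.
        moved = {(x + dx, y + dy) for (x, y) in candidates}
        # Sensory narrowing: keep only locations whose stored feature matches.
        candidates = {loc for loc in moved if feature_map.get(loc) == feature}
    return candidates


# A one-row environment in which features "A" and "B" each appear twice.
feature_map = {(0, 0): "A", (1, 0): "B", (2, 0): "A", (3, 0): "B", (4, 0): "C"}

observations = [((0, 0), "A"), ((1, 0), "B"), ((1, 0), "C")]

print(localize(feature_map, observations[:2]))  # still ambiguous
print(localize(feature_map, observations))      # disambiguated
```

After two observations two locations remain possible; the third observation removes the ambiguity. A real particle filter would additionally weight and resample hypotheses to cope with noise.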

This connection points to a deep relationship between the objectives that both robotics/AI and evolution are trying to solve. Methods like SLAM emerged in robotics to enable navigation in environments, and the hippocampal complex evolved in organisms likely for a similar purpose - i.e. in order to represent the location of an animal in space. One of the arguments of the TBT is that the same spatial processing that supported representing environments was compressed into the 6-layer structure of cortical columns, and then replicated throughout the neocortex to support modelling all concepts with reference frames, not just environments.

So in some ways, you can definitely think of the Thousand-Brains Project as leveraging concepts similar to SLAM or particle filtering to model all structures in the world (including abstract spaces), rather than just environments. A key element is the interaction of many, semi-independent modelling units in this task, as well as how they communicate (in addition to other elements).

One paper that you might find interesting is this one from Browatzki et al, 2014, where they use particle filters to perform object recognition. If you are aware of other papers that have used particle filters, SLAM, or related methods to perform object class and pose recognition (rather than environment localization), please feel free to share them, we’d definitely be interested to check them out.

Thank you for sharing those ideas! I am excited to see what comes next regarding the recent videos, the source code and the interactions with the community here. I already have a few comments & questions. Sorry if it is too long. I bet many of those points will be addressed in forthcoming videos. If so, I can wait!

1/ AI Bus / Cortical Communication Protocol (CCP)

Laying out the big picture of how learning modules (LMs) interact with each other is very useful. The fact that the outputs of an LM should have the same nature/structure as its inputs, if we want to be able to chain them hierarchically, is also a key constraint of the problem.

Can we see your CCP as a global buffer any LM can read from and write to? Or is it more like every LM can broadcast & sample what they want to?

2/ CCP and hierarchy

Do you envision a single global AI Bus for the whole cortex? (It sounded like it in the first video, where the hippocampus is on the same bus as the sensory LMs, but I guess that was for simplicity.) Or an AI Bus for each cortical area, meaning dozens or even a hundred such “local” AI Buses, with maybe some global AI Bus on top of those?

3/ Locality & Topography in the CCP

When modeling interactions between different LMs, do you distinguish between local lateral connections and long-range horizontal connections?

4/ WHERE vs WHAT columns

“But there’s an idea that these are like cortical columns. We’re now starting to call them modules because we think they actually might be more than one column. They might be like a where column or what column. So it’s a little bit confusing about that. So from a machine learning point of view, I might say it’s module, because I don’t think there’s a direct correspondence now with the cortical columns that there could be like, two different columns combined with each other. So I’m going to use that language interchangeably, unfortunately.”

I am a bit confused by this idea. Do you have some biological clues that guided you towards this separation between WHAT and WHERE columns that are grouped into modules? This vocabulary makes me think of the WHAT vs WHERE pathways for vision, but that is at another level (both the WHAT and WHERE pathways are made up of thousands of different LMs).

Related to this question: cortical columns in WHERE pathways seem to have object IDs corresponding to locations. How does this link with the common reference frame / pose relative to the body used on the AI Bus?

5/ Ambiguity through hierarchy

I think I saw or read somewhere that ambiguity, in the form of several hypotheses, is only conveyed laterally on the AI Bus and not to the next level in the hierarchy (I cannot find the source again). Is that correct? Would it mean that the inputs from the sensors cannot convey ambiguity in this sense?

6/ Number of different objects a single LM can learn

Do you think an LM in V1 could have the concept of a coffee cup? If there is this level of detail at the first level of the hierarchy, the number of different objects a single LM can learn looks huge! I am more inclined to think that each of the ~1000 LMs in human V1 (I consider a pinwheel in human/primate/cat V1 to be a single LM) only stores a few dozen concepts/objects tightly related to edge orientation/curvature/length/spread at a pose in a specific reference frame. I believe in the power of composition at the other hierarchical levels to represent complex objects. Would you disagree with this view?

7/ Object representation inside a LM

I like the idea that an object is defined by the structural relations between its subparts (it sounds like category theory could help us here, but I don’t know enough): tell me how your subparts interact and I will guess who you are. When talking about physical objects, it is easy to think about the physical transformations needed to change reference frames. Those transformations could be framed as analogies: A* is to A what B* is to B. If you add the fact that analogies are at the core of language, as Hofstadter has described, then we have a way of thinking about language-related LMs at higher levels of the hierarchy in the same way we think about physical 3D reference frames for objects. Curious to know what you think about this.

I’m eager to dive into the code to see if and how I can contribute.

Hi Matthieu, welcome to the forums!

- (also 2-3) Cortical Messaging Protocol

Great questions. This will probably become clearer in future videos, but one thing to highlight is that we have moved away from using the “AI bus” terminology, and simply refer to the “Cortical Messaging Protocol”. This is the CCP that messages within the cortex must follow.

As such, there isn’t a single global CMP signal, but rather any learning module can broadcast or receive a CMP signal. Exactly where a learning module will receive CMPs from, as well as send them to, will depend on the connectivity in the network. But e.g. there may be some LMs that are connected more widely, resulting in a more globally broadcast CMP. This relates to your 3rd question as well - while we currently don’t have that many LMs and the requirement for distinguishing global vs. local connectivity isn’t that important (e.g. small-world style connectivity), that will definitely come up in the future.
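
To make the contrast with a global buffer concrete, here is a minimal Python sketch in which messages only travel along explicit connections. The `Message` fields, the `Network` class, and the module names are hypothetical and chosen for illustration; they are not Monty’s actual classes or message format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """Hypothetical message format; the fields are assumptions for illustration."""
    sender: str
    object_id: str
    location: tuple  # assumed pose information in a shared reference frame


class Network:
    """Routes messages along explicit connections; there is no global buffer."""

    def __init__(self):
        self.connections = defaultdict(set)  # sender name -> receiver names
        self.inboxes = defaultdict(list)     # receiver name -> received messages

    def connect(self, sender, receiver):
        self.connections[sender].add(receiver)

    def broadcast(self, message):
        # Only modules wired to the sender receive the message.
        for receiver in self.connections[message.sender]:
            self.inboxes[receiver].append(message)


net = Network()
net.connect("LM_a", "LM_b")        # a local connection
for target in ("LM_a", "LM_b", "LM_c"):
    net.connect("LM_hub", target)  # a more widely connected module

net.broadcast(Message("LM_a", "mug", (0.1, 0.0, 0.3)))
net.broadcast(Message("LM_hub", "mug", (0.1, 0.0, 0.3)))

for name in ("LM_a", "LM_b", "LM_c"):
    print(name, [m.sender for m in net.inboxes[name]])
```

In this sketch, how “global” a signal is depends only on how widely its sender is connected, which matches the description above of some LMs effectively broadcasting more widely than others.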

- What vs Where

That’s a fair question, but I wouldn’t worry too much about that comment. In general, we now refer to learning modules as having a roughly 1:1 correspondence to cortical columns in the brain. However, the what-vs-where pathway, and its significance for the design of a Thousand-Brains system, is still an open question that we periodically discuss. One thing we’re quite confident about is that LMs in a where-style pathway will be particularly important for coordinating movements.

- Ambiguity

This is also an open question - in general at the moment we pass the most likely representation up through the hierarchy, but depending on the representational format (e.g. SDRs), there might be a union of hypotheses communicated. For lateral connections that support voting however, it is especially important that multiple hypotheses are communicated.
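
A small Python sketch of that distinction, under stated assumptions: the evidence scores, the function name `split_outputs`, and the `vote_threshold` cutoff are hypothetical, and this does not cover the SDR-union case mentioned above.

```python
def split_outputs(evidence, vote_threshold=0.5):
    """Split one module's hypotheses into an upward and a lateral output.

    evidence: dict mapping object name -> evidence score in [0, 1].
    vote_threshold: hypothetical cutoff for what is worth sharing laterally.
    """
    # Upward (hierarchical) output: only the single most likely object.
    most_likely = max(evidence, key=evidence.get)
    # Lateral (voting) output: every hypothesis that is still plausible.
    lateral = {obj for obj, score in evidence.items() if score >= vote_threshold}
    return most_likely, lateral


evidence = {"mug": 0.8, "bowl": 0.6, "spoon": 0.1}
up, lateral = split_outputs(evidence)
print("up the hierarchy:", up)
print("to voting peers:", lateral)
```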

- Objects in V1

One important prediction of the Thousand Brains Theory is that the huge number of neurons and the complex columnar structure found throughout brain regions including V1 does a lot more than simply identifying low-level features / high-frequency statistics like edges or colors.

However we don’t know exactly what the nature of the “whole objects” in V1 would be. They would definitely be something more complex than a simple edge, and they will be a statistically repeating structure in the world that is cohesive in its representation over time. This might end up being things that we might classically think of as “sub-objects”/object-components (like the parts of a leaf), or more independent objects like alphabetical letters or entire leaves.

It’s also important to note that compositionality and hierarchy are still very important in this view. It’s just that a single column (without hierarchy) can do a surprising amount, more than you would predict from e.g. 1-2 layers of an artificial-neural-network style architecture.

- Abstract spaces and language

Yes, our current view is that anything that can be represented in three dimensions or fewer can easily be visualized by humans, and can therefore be structured using the same principles as those that model the physical world. This includes abstract spaces like the concept of democracy, a family tree, or language. This is also why concepts in higher dimensions (e.g. quaternions) are so difficult for humans to visualize.

Hope that helps, looking forward to continuing the discussion as we release more material!

Thanks a lot Niels for your detailed answer!

One important prediction of the Thousand Brains Theory is that the huge number of neurons and the complex columnar structure found throughout brain regions including V1 does a lot more than simply identifying low-level features / high-frequency statistics like edges or colors.

To clarify my thinking, I am not implying that a V1 column simply identifies low-level features / high-frequency statistics like edges or colors. Rather than just identifying, I would say that it predicts the low-level features that will be sensed during the next iteration. This prediction does not seem that straightforward (and may explain the huge number of neurons in a single column) because it involves modeling at least three factors:

- Temporal sequences: a column can expect to see a specific edge at time T if it was sensing a given edge at time T-1 (this could be implemented with your Temporal Memory algorithm). V1 columns would be biased to see the same edge if no lateral information is provided, but other columns may rely heavily on this mechanism to learn sequences. (~ receptive field inputs)

- Moving features: a column can expect to see a specific moving edge at time T if this moving edge was sensed by a nearby V1 column at time T-1 and no sensor movement is expected. It has to learn its relationship with its neighbouring columns. (~ local lateral connections)

- Moving sensors: a column can expect to see a specific edge at time T if this edge was sensed at time T-1 by a distant V1 column corresponding to the efference copy of the forthcoming ocular saccade. This also has to be learned. (~ long-distance lateral connections)

I agree that a “dumber” column that can only predict a few dozen objects/concepts sounds restrictive. On the other hand, it allows the use of SOM-like algorithms that facilitate learning and clustering with nice properties (continuity, fuzziness, stability; see my comment here: 2021/11 - Continued Discussion of the Requirements of Monty Modules - #4 by mthiboust). It’s a trade-off I found interesting to make, but I’m open to revisiting my thinking based on your progress.

Hi Niels,

Thanks for sharing the paper.

The extract from Numenta’s Lewis et al. paper effectively illustrates the commonalities in approach, particularly in handling multiple sensory inputs and location updating. It’s fascinating to observe how both evolutionary processes and robotics have arrived at similar solutions for navigation and spatial representation.

Regarding papers that apply particle filters or SLAM-like methods to object recognition, here are a couple of additional references that may be of interest to you:

Deng et al. (2019) introduced PoseRBPF, a Rao-Blackwellized particle filter approach for 6D object pose tracking, which efficiently decouples 3D rotation and translation estimation.

p49.pdf

and

Best,

Anitha

Hi All,

As I went back and forth between the videos, the papers, and the TBP overview, I just wanted to confirm the terminology and its evolution:

Is it correct to say that

AI Bus => Cortical Messaging Protocol => Common Communication Protocol

all refer to the same thing, and that the name has evolved over the years? Or am I misinterpreting something?

Sorry for the naivety of my question.

Thanks,

Anitha

Hi @mirroredkube, yes, they all refer to the same concept. The name we settled on is Cortical Messaging Protocol (CMP).

Thanks for the responses Matthieu and Anitha, these kinds of discussions are exactly why we were excited to open-source the Thousand Brains Project!

@mthiboust:

Yes so prediction is definitely central to all of this, and so I think we are saying something similar. It might just help if I paraphrase our basic model at a high level.

To recap, we assume that the edges etc. which are often detected experimentally in V1 correspond to the feature input (layer 4 in our model). Each column integrates movement in L6, and builds a more stable representation of a larger object in L3 (i.e. larger than the receptive field in L4). L3’s lateral connections support voting, i.e. columns assisting each other’s predictions. The reasons we believe it operates like this are:

i) for a given column to predict a feature (L4) at the next time point, it can do this much better if it integrates movement, rather than just predicting the same thing, or predicting based on a temporal sequence - bearing in mind that we can make arbitrary movements and don’t follow a fixed sequence when we do things like saccading. Reference frames enable predicting a particular feature, given a particular movement - if the column can do this, then it has built a model of the object.

ii) For a given column to predict what another column will see, then it is much better if they do this using more stable representations, i.e. the representation in L3, rather than something that will change moment to moment, i.e. the representation in L4. Fortunately this fits well with the anatomy.
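
Point (i) can be illustrated with a toy Python sketch: if a column stores which feature sits at which location in an object’s reference frame, it can predict the next feature for any movement, not just for a memorized sequence. The dictionary model, the 2D integer locations, and the function `predict_feature` are assumptions for illustration, not how Monty or the cortex implements this.

```python
# A hypothetical learned model of one object: location in the object's
# reference frame -> feature expected there.
mug_model = {
    (0, 0): "curved surface",
    (0, 1): "rim",
    (1, 0): "handle",
}


def predict_feature(model, location, movement):
    """Predict the next feature by integrating movement, then looking it up.

    Returns (new_location, predicted_feature); the feature is None if the
    model has nothing stored at the new location.
    """
    new_location = (location[0] + movement[0], location[1] + movement[1])
    return new_location, model.get(new_location)


location = (0, 0)
# The movements are arbitrary rather than a fixed sequence, yet each
# resulting feature can be predicted from the model.
for movement in [(0, 1), (1, -1), (-1, 0)]:
    location, feature = predict_feature(mug_model, location, movement)
    print(movement, "->", feature)
```

A pure sequence memory would need to have seen this exact order of features before; the location-based model only needs the movement.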

Hope that helps at least in terms of the language we tend to use at Numenta/TBP.

With that clarified, I agree that predictions become even more interesting when things in the external world are moving independently, and that clearly this provides additional information for how one column can predict the representation in another. The concept of representing moving objects in the external world is an active area of research and brainstorming for us, so nothing that we have a solid grasp on yet.

It’s super fascinating! A popular book amongst several of us on the team is “A Brief History of Intelligence” by Max Bennett (A Brief History of Intelligence - Max Bennett). If you’re interested in the evolution of intelligence and haven’t already come across it, I can highly recommend checking it out.

And that’s brilliant, thanks very much for sharing those papers. We’ll check them out.

Good morning all! New to the community so currently catching up with all the discussions and wanted to probe more about the what/where topic.

If the “what” module is identifying, isn’t it always “in relation to”? And as such, does it have “where” dependencies attached to it?

For example, a ceramic bowl-shaped object could be identified as a cup or a bowl. A cup and a bowl are analogous to each other through the relationship “consumption vessel” or “container”.

The nuance within that relationship is what further differentiates the two.

And is the classification of an object as a cup versus a bowl a function of the context in which that object is used? (Either live context or memory context.)

For example, we have the context markers on the object itself such as diameter, isHandle? Etc.

We also have environmental context markers (cutlery? Straws? Fluids? Solids?)

We have time context markers (breakfast time/ lunch…am/pm)

So with that in mind, doesn’t the requirement of the “where” always persist?

And vice versa, for one to effectively calculate/identify “where”, might that depend on a live understanding of all of the “whats” in the context frame?

As such, could it be that a what/where meta module has to persist?

Best

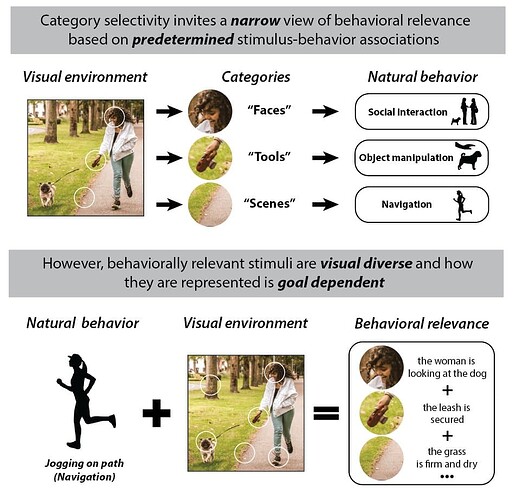

Not sure I understand your questions, but it makes me think about this recent paper “Rethinking category-selectivity in human visual cortex”. Maybe this will help?

In this perspective paper, we argue it’s time to move beyond the framework of category selectivity (while building on its insights) towards one that prioritizes the behavioral relevance of visual properties in real-world environments

Thank you so much for linking this paper. It articulates my previous post far better than my own attempt.

That is what I had in mind with regards to needing “where” + “why” modules working together where:

Where = natural behaviour module

Why = visual environment module

Both combined = behavioural relevance.

I’m out at the moment and haven’t been able to read the full paper, so forgive me for oversimplifying it.

Hi Josh, thanks for the follow-up questions. I’m not sure how well this answers what you are asking, but I think it’s worth clarifying that the terms “what” vs. “where” can be, at least in my opinion, quite misleading, as spatial computation is clearly important throughout the brain, and even within cortical columns. This is central to the TBT claim that every column leverages a reference frame. Even the alternative naming of the ventral and dorsal streams as a “perception” vs. “action” stream can be misleading, because again all columns have motor projections, e.g. eye control mediated by columns in the ventral stream projecting to the superior colliculus.

However one distinction that might exist (at least in the brain), is the following: for the columns to meaningfully communicate spatial information with one another, there needs to be some common reference frame. Within a column, the spatial representation is object-centric, but this common reference frame comes into play when different columns interact.

One choice is some form of body-centric coordinates, which is likely at play in the dorsal stream, and would explain its importance for directing complex motor actions, as in the classic Milner and Goodale study that spawned the two-stream hypothesis (Separate visual pathways for perception and action - PubMed).

An alternative choice is an “allocentric” reference frame, which could be some temporary landmark in the environment, such as the corners of a monitor, or a prominent object in a room.

So far the between-column computations in Monty, such as voting, have made use of an ego/body-centric shared coordinate system. However, this might change in the future, where motor coordination would benefit from egocentric coordinates, and reasoning about object interactions might benefit from allocentric coordinates.
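
As a rough sketch of what a shared body-centric frame buys you, the Python snippet below converts a point from an object-centric frame into body coordinates with a 2D rotation and translation. The function `to_body_frame`, the 2D simplification, and the numbers are assumptions for illustration; Monty’s actual pose handling is not shown here.

```python
import math


def to_body_frame(point_in_object, object_position, object_rotation):
    """Map a 2D point from an object's frame into the body-centric frame.

    object_position: (x, y) of the object's origin in body coordinates.
    object_rotation: counterclockwise rotation of the object in radians.
    """
    px, py = point_in_object
    cos_r, sin_r = math.cos(object_rotation), math.sin(object_rotation)
    # Rotate into the body's orientation, then translate by the object's position.
    x = cos_r * px - sin_r * py + object_position[0]
    y = sin_r * px + cos_r * py + object_position[1]
    return (x, y)


# Two hypothetical columns sense different parts of the same mug.
mug_position, mug_rotation = (2.0, 1.0), math.pi / 2
handle = to_body_frame((1.0, 0.0), mug_position, mug_rotation)
rim = to_body_frame((0.0, 1.0), mug_position, mug_rotation)
print(handle, rim)
```

Each column can keep its own object-centric model, while anything exchanged between columns is expressed in the one frame they all share. Swapping in an allocentric frame would only change which origin and rotation are passed in.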

Hope that helps.