I have seen quite a lot of the research meetings and Monty Overviews, but am still unable to find any references to any functional components of Monty that resembles similar to Hippocampal-Entorhinal Complex. the HC-EC complex plays vital roles in short term memory storage, Global Position System (Grid Cell Mechanism in Entorhinal Complex) and also has the ability to store what importance of specific temporal sequence over others and other functions. I believe this plays key functionality in vision (object detection). Speech Interpretation, touch sensation interpretation, and even while loading Object Reference Frames and global reference frames alike. Is this being implemented in Monty? Or did the team decide to incorporate this functionality somehow within the Learning Modules itself?

I’m curious to know the teams plans on this too. Though I do recall @vclay mentioning that they were actively working towards replicating hippocampal function into the TBP framework.

Being one of their goals seems to be that of improving model efficiency, I would imagine they’d be looking at some sort of dedicated structure, a kind of Tolman-Eichenbaum machine, as opposed to some sort of ‘self-attention’ type approach. (given the computational costs associated there.)

Great question @deric.pinto , yes we definitely believe that the hippocampal-entorhinal complex is important, and we believe we can capture its behavior as a form of learning module.

In particular, part of the underlying Thousand Brains Theory is that the hippocampal formation evolved to enable spatial navigation in animals (i.e. grid cells for reference frames of the environment) and rapid episodic memory. Over time, evolution replicated this approach of modeling the world with reference frames in the form of cortical columns, which were then replicated throughout the neocortex for more general purposes.

As such, we believe that the core computations that a cortical column performs are similar to the hippocampal formation. Since LMs are designed to capture the former, they also implement the core capabilities of the latter. The key difference we believe is the time-scale over which learning happens, with the hippocampal complex laying down new information much more rapidly. In Monty, this would be implemented as a high-level LM that builds new information extremely quickly.

An aside on biology and speed of learning

It’s worth noting that the speed of learning in LMs is an instance where Monty might be “super-human”, in that within computers, it is very easy to rapidly build arbitrary, new associations, while this is challenging for biology. Evolution has required innovations such as silent synapses and an excess of synapses in the hippocampal complex. This degree of neural hardware to support rapid learning cannot be easily replicated in the compact space of a cortical column, so in biology the latter will always learn much more slowly, and very rapid learning is a more specialized domain of the hippocampal formation.

In the case of Monty, we might choose to enable most LMs to have fairly rapid learning, although we believe there are other reasons that we might restrict this, such as ensuring that low-level representations do not drift (change) too rapidly with respect to high-level ones.

Let me know if i can clarify anything further.

The parts of incorporating rapid learning directly within the Learning Modules makes sense to me , but that does not seem to be the only purpose of the Hippocampus , am curious to know how the episodic memory is being incorporated into the Learning Modules , for instance when the Hippocampus decides to replay a particular episodic instance, all the sensory networks related to the episode start firing regardless of modalities for ex: glimpses of vision , with the sense of smell, even auditory and the associated feelings in the amydala everything starts firing at the same instance making the organism aware of that episode in a very deep experience of the memory., this seems like shared storage model to store all the combined senses in one place for that sensation , am bit skeptical if incorporating this directly into learning modules might not work , as this is a shared learning experience and will need an isolated component that will have to collect this from all the learning modules during such episode building process. does that make sense ?

Hmmmm… Very interesting, though admittedly, not the response I was expecting.

Thinking back on Mr. Hawkins books now, it did seem as though he wanted to approach replicating the cortex absent the limbic system. Or at least that what his writing led me to think. So the approach here makes a lot of sense in that regard.

I do share some of @deric.pinto’s questions, however. When I think of hippocampal-entorhinal complex function, I think of:

- The time-wise indexing of ‘memory’ across cortical space

- Pattern separation of “whole” memories

- Pattern completion

This is probably just me being dense, but how would you achieve these things without a specialized/central structure? Wouldn’t you eventually run into the same kinds of issues as transformers do with self-attention?

Thanks for the follow-up questions @deric.pinto and @HumbleTraveller

Re. the nature of episodic memory and time

One important aspect that we believe every column models is the behavior of objects, in other words how they change over time. Some objects follow a very stereotyped sequence of state changes as a function of time (e.g. what a bird looks like when it is flying, the melody of a song), whereas others are more conditional on the specifics at any given moment, such as intervening actions (e.g. how a napkin looks when folded in different ways). Regardless, the idea of modelling object behavior as a sequence of states is not very different from episodic memories as a sequence of events. As such, we think that the implementation of the former in learning modules may be sufficient for episodic memory.

Re. the recovery of sensations with memory recall

This is definitely an important aspect. One way to conceptualize this is the hippocampus operating as an LM that is near the top of the hierarchy, as LMs corresponding to working memory in the pre-frontal cortex also would be. As such, a hippocampus-LM can use top-down influence to recover representations in diverse regions of cortex. This is thus not very different from the top-down influence that we are implementing in (cortical column) LMs, where a representation at an abstract level of the hierarchy can recover a low-level representation. For example, observing a particular coffee mug might predict that a logo will be observed on its surface.

You’re right that you would need something like the amygdala to invoke more emotional memories (in turn influencing a virtual hypothalamus etc.), but this is not something we intend to incorporate.

Re. pattern separation and pattern completion

This is something we believe that each column will be doing, namely that when classifying objects, there is both a recognition of specific instances (pattern separation, e.g. this is my favorite mug), and a recognition of broad classes (pattern completion, e.g. this is a mug, or even more generally, this is a vessel for fluids). We aren’t entirely clear on the details, but we think these would be represented separately in layers L2/L3 for communication downstream.

High-level

I should note this is an area of research, so it may definitely be the case that we need to make additional changes to fully capture the hippocampal complex, and in particular unique properties it may have. However, our current hope is to do most of that with the elements found in LMs. Hope that makes sense, happy to provide further clarification (including on the question about transformer self-attention if you are able to elaborate on your concern @HumbleTraveller ).

Thank you for the detailed response, @nleadholm.

Approaching the hippocampal complex as a sort of top-level node in the LM heirchy makes a lot of sense. If you can pull off doing that efficiently, I’d go so far as to call the implementation beautiful. Though I do have concerns regarding the processing speed when working at scale.

My reference to Transformer’s self-attention is namely in its O(n^2) runtime relative to its input length.

While not a direct one-to-one homologue, in a sense, transformers are emulating cortico-thalamic looping via their self-attention mechanisms. Normally this works fine. However, due to that quadratic runtime as the model’s sequence length increases, its time-to-output increases quadratically. Its essentially just a giant computational bottleneck.

The potential issue I see with TBP, and my main concern, is you’ll be emulating not only attential mechanisms, but global location/coordinate mechanisms too (e.g. emulating the EC-hippocampal areas). And that each of these will in turn run into the same kind of bottlenecking limitations as Transformers do with self-attention.

As an example, if we look at those recent videos you guys’ just put out (the ones regarding increasing the efficieny of LMs at runtime) we see that you’re running into issues at recognizing objects, like something to the order of 1 - 86 minutes to identify a coffee mug (if I recall correctly).

By itself, that lone object obviously wouldnt make it to the level of “hippocample LM,” as it itself would be a subset of a much more complex scene.

My worry then is that as you begin to scale up the throughput of information being processed by the TBP framwork, its behaivoral-response times will become increasingly slowed. Does this make sense? Not sure if I’m wording things correctly…

**Also, admittedly, I am a layman when it comes to both ML and neurosci. These sources may do a better job elaboriting some of my concerns as it pertains to this particular problem:

- ON THE COMPUTATIONAL COMPLEXITY OF SELF-ATTENTION

- Attention in Transformers, visually explained (3blue1brown video)

EDIT: Apologies. I re-watched that video where I got the 1 - 86 minute time-to-recognition quote (https://www.youtube.com/watch?v=JVz0Km98hLo&t=2295s). Turns out, that was when the LMs were selecting an object from out of a scene of 77 objects. But even still, I think the above example is still a valid concern.

Thanks @HumbleTraveller for clarifying that. While some researchers have drawn connections between transformers and the hippocampal complex, to my mind the best mapping of self-attention onto Monty is to voting, discussed in this video on transformers vs. Monty.

Voting and self-attention are similar in the sense that they are potentially an all-to-all operation between a set of representations, with as you say O(n^2) complexity in the number of representations. However, we don’t expect to actually have/need all-to-all connectivity in Monty (neither would it be found in the brain), but rather these lateral, voting type-connections would be much sparser, significantly reducing the complexity.

More generally, we’re not too worried about the computational complexity of Monty at the moment. The slow wall-clock time primarily comes down to the implementation of Monty not being highly optimized vs. e.g. deep neural networks on GPUs, but when we look at the actual number of floating-point operations (amount of computation) that Monty needs, it compares very favorably to deep neural networks. There are various things, from neuromorphic hardware, to the utilization of low-precision bit-based representations that should hopefully help when we eventually want to make an effort at scaling Monty up.

Hope that answers your concerns about self-attention, but let me know if I can elaborate.

I’ve been thinking more about this on-and-off over the last day or so. And I think I’m starting to come around to your perspective on it. My concerns are still there, but I’m guessing favorable benchmarks will dissuade those.

I’ll need to give that video you suggested a watch!

Also, at risk of deviating away from the original post, I have to ask: do you guys anticipate incorporating internal value structures into the TBP framework (think reward-modelling and goal-state generation, that sort of thing)? Or would you prefer it be driven by externally provided drives (e.g. tasks given to it by a human)? What do you suppose would be the advantages/disadvantages to each approach?

Edit: about 45 minutes into the video and I just have to say that Jeff’s insistent need to understand everything makes me smile somewhat.

Also, this is SUPER pedantic of me, but in the vid you guys are getting into specifics on transformer token encodings, discussing whether it encodes to individual characters/words.

I could be wrong, but to my understanding, modern LLMs don’t quite encode that way (though they DO still follow similar probability distribution principals). They way it’s known to me is that they encode byte-wise, to the tune of about four bytes, on average (or about 75% of a word). So essentially, they carve linguistic space up into chunks, then store those chunks in memory, as tokens.

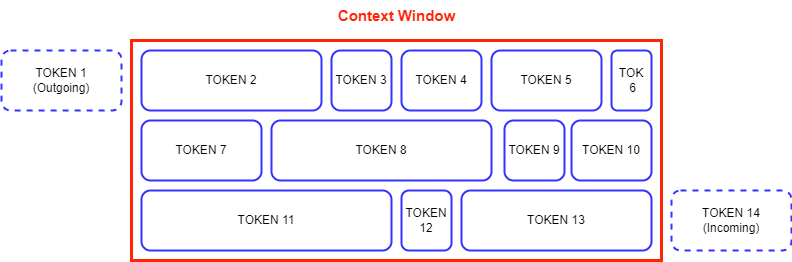

We can actually take this information and by using a given models publicly available context window size, calculate it’s memory horizon.

For example, if we look at Anthropic’s Claude, we see that it’s context window is about 200k tokens (this is back in March 2024). Then we can simply multiply our 4 bytes against that 200,000 to get a memory horizon of 800 kilobytes.

We can confirm this by generating a text file of size 800KB (actually slightly less due to some buffer memory constraints), then by uploading it into Claude. We ended up using Herbert’s Dune to do this…

Now the fun part is when we output Claudes decoded token key-pairs. We’re returned with this:

As we can see, the key-pairs aren’t all fixed-lenth. Some are as small as 2 bytes, others as large as 10. This let’s us infer that Anthropic is likely using some form of lossy encoding method to tokenize it’s inputs. So Claudes working memory isn’t so much uniform…

…As it is variable:

It was this lossy encoding behavior that originally led me view a transformers self-attention mechanisms as analogous to cortico-thalamic processing. Or rather, to the working memory constraints as seen in humans.

But anyhow, I’m rambling now and I realize this is all stuff you’re probably well aware of. So I’m going to show myself back to that video now… Have a good night!

addressed a bunch of my concerns , great explanation @nleadholm.

couple of follow-up;s though :

you did mention that having some sort of hippocampal complex equivalent of system somewhere in higher level of the hierarchy could help in the retrieval of pattern more easily , Is there any biological proof to this or is it just more of a hypothesis the team has predicted how it can be achieved ?

one more question that am still having trouble understand is the concept of Path Integration for instance when we move across rooms in our hours the HC-EC complex keeps every organized into one ref. frame as house and we can manipulate the frame by moving across different rooms in the house , and it is exactly the same concept with any object for instance , a cup , car , computer or any other object or concept ( like democracy or currency ) . We use path Integration at every point of thinking to analyze the state of the system with reference to us., this is a big hint to me that HC-EC complex is a key component that works with every region of cortex right from V1 , V2 to IV in visual cortex to similar regions in auditory and somato sensory cortices. would that be counter intuitive to assume HC-EC complex would only work with higher layers of cortex or is the team separating out some functionality of entorhinal cortex directly onto the cortical column for easy implementation. ?

Hi @deric.pinto thanks for your follow-up questions, I would say it is more of an observation based on the utility that the hippocampal complex provides.

Re. your second question, if I’ve understood correctly: it was hypothesized in the Thousand Brains Theory that each cortical column is capable of path integration in object-centric reference frames, independent of the entorhinal complex. So while they are similar concepts, it is not the actual grid cells in the entorhinal complex that are needed for path integration in e.g. V1 cortical columns.

Hope that makes sense, otherwise happy to clarify further.